Migrating Existing ML Models To Fabric Data Science

Importing and registering existing ML models to Fabric model registry

In the last blog, I showed how you can load ML models for scoring in Fabric. The assumption was that you already have an ML model registered in the Fabric model registry. What if you have existing serialized models from another platform (e.g. AzureML, Databricks) that you want to migrate to Fabric? Currently, there is no option to do this using the GUI. This will change in the future, but for now, below are two scenarios based on whether you have just the pickle file or the MLmodel format containing the pickle file along with other model artifacts (conda.yaml, model metadata, etc.).

The focus of this blog is migrating the models and their related artifacts, not the entire tracking store with its artifacts. If that's what you are looking for, look into the mlflow-export-import Python library.

Serialized Model

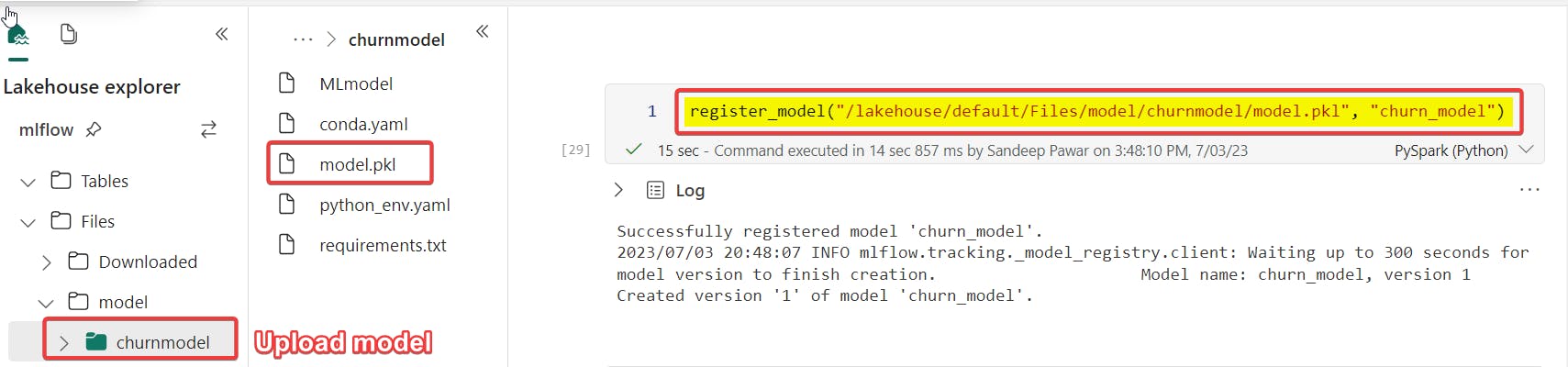

Below are the steps and the code if you have just the pickle (or any other supported serialized format) file.

Steps:

Save your serialized models to a folder.

Upload the models to a folder in the Files section of the lakehouse. Mount the lakehouse in the notebook.

In the Fabric notebook, use the function below to create an MLflow run and register a scikit-learn model. You will need to provide three parameters:

model_file_path : The .pkl location using the File API path, i.e. /lakehouse/default/Files/<folder>/<model>.pkl

model_name : The name of your model

artifact_path : Optional. The location under the run where the model will be saved. If you don't specify anything, it will be saved under imported_model

Note that the function below assumes a sklearn model. You can register other model flavors the same way. Refer to the MLflow documentation.

    import cloudpickle
    import mlflow
    import mlflow.sklearn  # make the sklearn flavor explicitly available
    from pathlib import Path


    def register_model(model_file_path: str, model_name: str, artifact_path: str = "imported_model") -> None:
        """
        Author : Sandeep Pawar | fabric.guru | July 3, 2023

        Register a model in the Fabric MLflow model registry.

        This function will log the model as an artifact within an MLflow run
        and then register the model in the Fabric MLflow model registry with the given name.

        Parameters
        ----------
        model_file_path : str
            The path to the file containing the model to be registered.
        model_name : str
            The name to be given to the registered model.
        artifact_path : str, default "imported_model"
            The path where the model will be stored as an artifact within the run.

        Returns
        -------
        None

        Raises
        ------
        FileNotFoundError
            If the provided model_file_path does not exist.

        Examples
        --------
        >>> register_model("/lakehouse/default/Files/<folder>/<model>.pkl", "churn_model", "marketing_imported_model")
        """
        model_path = Path(model_file_path)
        if not model_path.exists():
            raise FileNotFoundError(f"No file found at provided model path: {model_file_path}")

        # Load the model
        with model_path.open("rb") as f:
            model = cloudpickle.load(f)

        # Start a new MLflow run
        with mlflow.start_run() as run:
            # Log the model as an artifact within the run
            mlflow.sklearn.log_model(model, artifact_path=artifact_path)

            # Register the model in the MLflow model registry
            mlflow_model_uri = f"runs:/{run.info.run_id}/{artifact_path}"
            mlflow.register_model(model_uri=mlflow_model_uri, name=model_name)

Example:

    register_model("/lakehouse/default/Files/<folder>/<model>.pkl", "churn_model", "marketing_imported_model")

If you need to include a signature, register the model and follow this example.

If you have many models, you can create a dictionary with the model name and file path and iterate over it using the above function.
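To make the dictionary idea concrete, here is a minimal sketch. The helper name `register_many` and the `register_fn` argument are my own for illustration and are not part of MLflow or Fabric; the sketch assumes `register_fn` takes the file path first and the model name second, like `register_model` above. It collects failures instead of stopping at the first one, which is handy when importing a large batch.

```python
from typing import Callable, Dict, List, Tuple


def register_many(
    models: Dict[str, str],
    register_fn: Callable[[str, str], None],
) -> List[Tuple[str, str]]:
    """Register every model in `models` ({model_name: model_file_path}).

    `register_fn` is assumed to accept (model_file_path, model_name).
    Returns a list of (model_name, error_message) for models that failed.
    """
    failures: List[Tuple[str, str]] = []
    for model_name, model_file_path in models.items():
        try:
            register_fn(model_file_path, model_name)
        except Exception as exc:  # keep going so one bad file does not stop the batch
            failures.append((model_name, str(exc)))
    return failures


# Hypothetical usage in a notebook where register_model is already defined:
# failures = register_many(
#     {"churn_model": "/lakehouse/default/Files/<folder>/<model>.pkl"},
#     register_model,
# )
```

Anything returned in the failures list can be fixed and re-run on its own without re-registering the models that already succeeded.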

Note that if the model is stored at a remote location (blob storage, S3, etc.) that is accessible from the Fabric notebook via an API, you can skip step 2 (uploading to the lakehouse) and provide the remote path directly.

Check the workspace or use the function I shared in the last blog to get a list of all the registered models.

Always back up and test. Load the newly registered model and create predictions to ensure it's working as expected.
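A quick check along these lines might look like the sketch below. The helper names `model_uri` and `smoke_test_model` are hypothetical; the sketch assumes the model is loaded through the generic `mlflow.pyfunc.load_model` call with a `models:/<name>/<version>` URI, and that `sample` is a small input in whatever shape your model expects (for example a pandas DataFrame with the training columns).

```python
from typing import Any, Callable, Optional


def model_uri(model_name: str, version: int) -> str:
    """Build a registry URI of the form models:/<name>/<version>."""
    return f"models:/{model_name}/{version}"


def smoke_test_model(
    model_name: str,
    version: int,
    sample: Any,
    loader: Optional[Callable[[str], Any]] = None,
) -> Any:
    """Load a registered model and return its predictions for `sample`.

    `loader` defaults to mlflow.pyfunc.load_model; it is a parameter only
    so the helper can be exercised with a stand-in loader.
    """
    if loader is None:
        import mlflow.pyfunc  # imported here so the URI helper works without MLflow

        loader = mlflow.pyfunc.load_model
    model = loader(model_uri(model_name, version))
    return model.predict(sample)
```

Comparing these predictions against the ones produced on the original platform for the same sample is a simple way to confirm the migration did not change the model's behavior.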

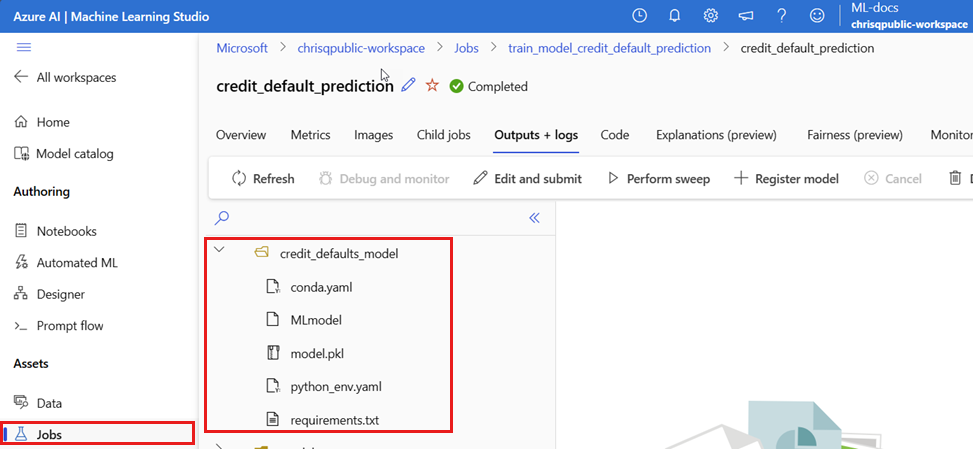

MLmodel Format

MLflow adopts the MLmodel format as a way to create a contract between the artifacts and what they represent. The MLmodel format stores assets in a folder. Among them, there is a particular file named MLmodel. This file is the single source of truth about how a model can be loaded and used [1]. Below is a screenshot from AzureML.

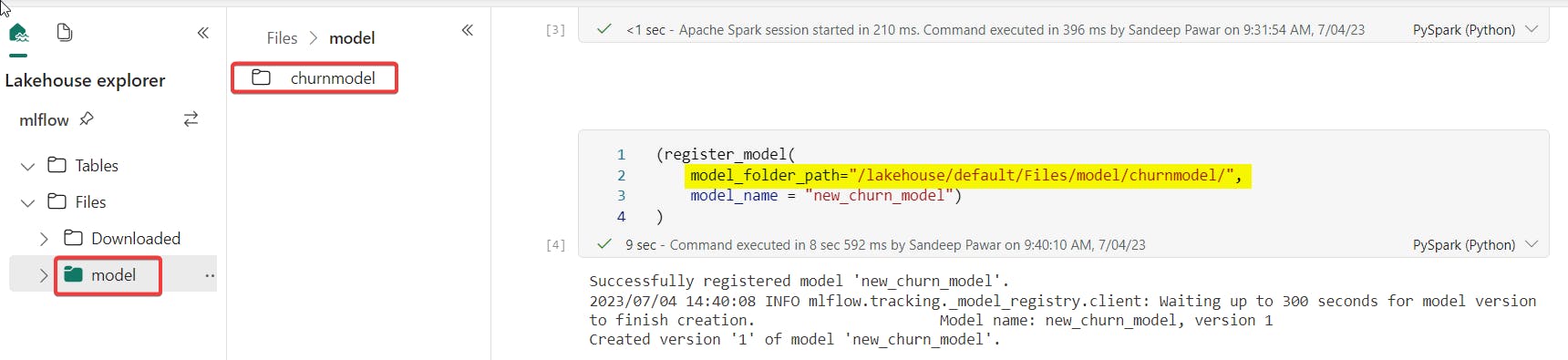

Steps:

The steps are similar to those above, but instead of uploading a single model, you will upload the existing MLflow model folder containing:

MLmodel : model metadata file

model.pkl : serialized model

conda.yaml : conda environment
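Before registering, it can help to confirm the uploaded folder really contains all three files. The following is a small sketch using only the standard library; the helper name `missing_model_files` is my own, and the file names are the three listed above.

```python
from pathlib import Path
from typing import List

# The three artifacts expected in the MLflow model folder
REQUIRED_FILES = ("MLmodel", "model.pkl", "conda.yaml")


def missing_model_files(model_folder_path: str) -> List[str]:
    """Return the required file names that are not present in the folder."""
    folder = Path(model_folder_path)
    return [name for name in REQUIRED_FILES if not (folder / name).is_file()]
```

An empty list means the folder is complete. If every file is reported missing, the lakehouse is probably not mounted or the path is wrong.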

Use the function below. Instead of the location of the pickle file, provide the folder path containing the above three artifacts. Note that the folder path is in File API format, i.e. "/lakehouse/default/Files/<mlflow_folder>/"

After registering to the model registry, it takes a few minutes for the model to show up in the workspace.

    import cloudpickle
    import mlflow
    import mlflow.sklearn  # make the sklearn flavor explicitly available
    from pathlib import Path


    def register_model(model_folder_path: str, model_name: str, artifact_path: str = "imported_model") -> None:
        """
        Author : Sandeep Pawar | fabric.guru | July 4, 2023

        Register an mlflow model folder to Fabric model registry.

        This function will log the model as an artifact within an MLflow run
        and then register the model in the Fabric MLflow model registry with the given name.
        The specified folder must have model as model.pkl, environment as conda.yaml
        and the MLmodel file which contains the model metadata.

        Parameters
        ----------
        model_folder_path : str
            The lakehouse File path to the folder containing the mlflow model artifacts.
        model_name : str
            The name to be given to the registered model.
        artifact_path : str, default "imported_model"
            The path where the model will be stored as an artifact within the run.

        Returns
        -------
        None

        Raises
        ------
        FileNotFoundError
            If the provided model_folder_path does not exist.

        Examples
        --------
        >>> register_model("/lakehouse/default/Files/<mlflow_folder>/", model_name="churn_model")
        """
        model_fpath = Path(model_folder_path)
        if not model_fpath.exists():
            raise FileNotFoundError(
                f"Folder not found at provided path: {model_folder_path}. Check if Lakehouse has been mounted."
            )

        model_path = Path(model_folder_path, "model.pkl")
        conda_path = str(Path(model_folder_path, "conda.yaml"))
        mlmodel_path = str(Path(model_folder_path, "MLmodel"))

        # Load the model
        with model_path.open("rb") as f:
            model = cloudpickle.load(f)

        # Log the model within a new run and register it in one call
        with mlflow.start_run() as run:
            mlflow.sklearn.log_model(
                sk_model=model,
                artifact_path=artifact_path,
                registered_model_name=model_name,
                conda_env=conda_path,
                # Note: MLflow documents `metadata` as a dictionary of custom
                # metadata; here the path to the original MLmodel file is
                # recorded as-is.
                metadata=mlmodel_path,
            )

Example:

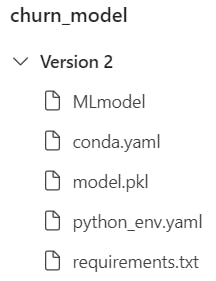

Note that in the code above, I did not log any additional model artifacts (charts, files, signatures etc.). If you have any additional files that you need to log, modify the above code. Also note that after registering the model for the first time, it will be registered with version 1. If you want to change the model version to reflect the correct version, update the model version using this example.

As I mentioned above, uploading a model directly using the UI may be possible in the future as Fabric progresses towards GA, but for mass importing and registering models, the code above will still be helpful.

A third scenario, which I won't cover, is custom models. Since Fabric uses the MLflow model registry, you need to register the model in the MLmodel format. Follow this article for the steps to do so.

In the next blog, I will show how to download models registered in the Fabric model registry.