Recursively Loading Files From Multiple Workspaces And Lakehouses in Fabric

Using Spark to load files from multiple workspaces and lakehouses

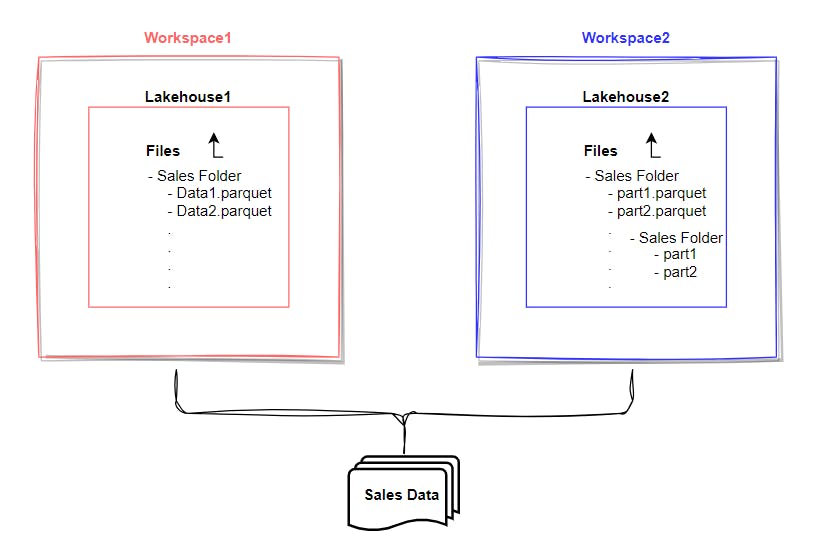

Imagine a scenario in which you are collaborating with two colleagues who have stored sales data in two separate lakehouses. Each lakehouse is in a different workspace and has a different name, but both share the same schema. The data is saved in folders containing numerous Parquet files. One colleague saved the Parquet files with names starting with "data-", while the other used Spark, which typically writes files with names beginning with "part-". Your task is to read this data in a notebook for further analysis.

One obvious solution is to read each dataset individually and union the resulting Spark DataFrames. An easier and more flexible option, which doesn't require mounting those lakehouses in the notebook, is to use their ABFS paths together with a couple of Spark options that recursively load folders and filter files with wildcards.

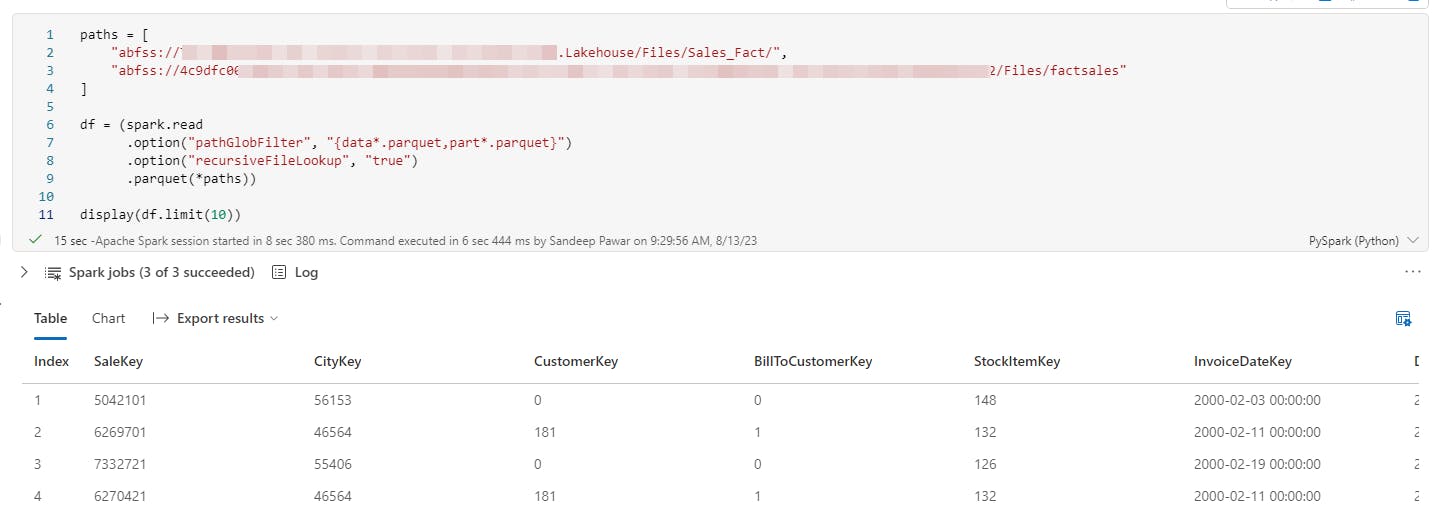

```python
# `spark` is the SparkSession that Fabric notebooks provide by default.
# Replace the placeholders with the IDs of each workspace, lakehouse and folder.
paths = [
    "abfss://<workspace1_id>@onelake.dfs.fabric.microsoft.com/<lakehouse1_id>/Files/<folder_name>/",
    "abfss://<workspace2_id>@onelake.dfs.fabric.microsoft.com/<lakehouse2_id>/Files/<folder_name1>/<folder_name2>",
]

df = (spark.read
      .option("pathGlobFilter", "{data*.parquet,part*.parquet}")  # only files whose names start with data or part
      .option("recursiveFileLookup", "true")  # recursively load files from nested subfolders
      .parquet(*paths))
```
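When more lakehouses are added, typing each ABFS path by hand makes it easy to pair a workspace with the wrong lakehouse. The sketch below assumes the path pattern shown above (workspace before the @, lakehouse after the host, then Files/<folder>) and builds the paths list from (workspace, lakehouse, folder) tuples. The helper onelake_files_path is hypothetical, not part of any Fabric or Spark API, and the IDs are the same placeholders used above.

```python
# Hypothetical helper (not a Fabric or Spark API). It assumes the OneLake path
# pattern abfss://<workspace>@onelake.dfs.fabric.microsoft.com/<lakehouse>/Files/<folder>
ONELAKE_HOST = "onelake.dfs.fabric.microsoft.com"


def onelake_files_path(workspace_id: str, lakehouse_id: str, folder: str) -> str:
    """Return the ABFS path of a folder under a lakehouse's Files section."""
    folder = folder.strip("/")  # avoid doubled slashes when joining
    return f"abfss://{workspace_id}@{ONELAKE_HOST}/{lakehouse_id}/Files/{folder}/"


# One entry per (workspace, lakehouse, folder); the values are placeholders.
sources = [
    ("<workspace1_id>", "<lakehouse1_id>", "<folder_name>"),
    ("<workspace2_id>", "<lakehouse2_id>", "<folder_name1>/<folder_name2>"),
]
paths = [onelake_files_path(ws, lh, folder) for ws, lh, folder in sources]

for p in paths:
    print(p)
```

The resulting list can be unpacked into .parquet(*paths) exactly as in the snippet above; adding a third lakehouse then only means adding one tuple.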

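In the code above, paths holds the ABFS paths of the folders where the files are saved. The pathGlobFilter option takes a glob pattern, not a dictionary: the braces list alternative patterns, so only files whose names start with data or part and end in .parquet are loaded. The pattern is matched against file names, not folder names. The recursiveFileLookup option looks for files within all subfolders under the specified folders; it also disables partition discovery. Setting recursiveFileLookup to false (its default) will only load files from the top-level folder.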
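To make the two options more concrete without a Spark session, here is a rough local model in plain Python. It is a simplified sketch, not Spark's actual file-listing logic: it assumes the pattern contains at most one non-nested {a,b,...} group, uses fnmatch as a stand-in for Hadoop glob matching, and follows the description above, where only top-level files are read when recursive lookup is off. The folder names and files it creates are made up for illustration.

```python
import fnmatch
import re
import tempfile
from pathlib import Path


def expand_braces(pattern: str) -> list[str]:
    """Expand one non-nested {a,b,...} group, e.g. '{x*,y*}' -> ['x*', 'y*']."""
    match = re.search(r"\{([^{}]*)\}", pattern)
    if match is None:
        return [pattern]
    head, tail = pattern[: match.start()], pattern[match.end():]
    return [head + alt + tail for alt in match.group(1).split(",")]


def list_matching_files(root: Path, glob_filter: str, recursive: bool) -> list[str]:
    """Approximate pathGlobFilter + recursiveFileLookup on a local folder.

    The filter is applied to file names only, like pathGlobFilter.
    """
    patterns = expand_braces(glob_filter)
    candidates = root.rglob("*") if recursive else root.glob("*")
    return sorted(
        p.relative_to(root).as_posix()
        for p in candidates
        if p.is_file() and any(fnmatch.fnmatchcase(p.name, pat) for pat in patterns)
    )


# Build a small, made-up folder tree resembling the scenario in this post.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "2023" / "q1").mkdir(parents=True)
    for rel in ["data-001.parquet", "2023/part-0000.parquet",
                "2023/q1/data-002.parquet", "2023/_SUCCESS", "notes.txt"]:
        (root / rel).touch()

    glob_filter = "{data*.parquet,part*.parquet}"
    top_level = list_matching_files(root, glob_filter, recursive=False)
    nested = list_matching_files(root, glob_filter, recursive=True)

print(top_level)  # ['data-001.parquet']
print(nested)     # ['2023/part-0000.parquet', '2023/q1/data-002.parquet', 'data-001.parquet']
```

Both runs skip _SUCCESS and notes.txt because of the name filter; only the recursive run picks up the files in the nested 2023 and 2023/q1 folders.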

You can also create shortcuts to these folders in a new lakehouse, but shortcuts alone won't solve the problem of loading files from nested subfolders. Combined with the approach above, however, they let you put relative paths (for example, Files/<shortcut_name>) instead of ABFS paths in the paths list, provided that lakehouse is attached to the notebook as its default lakehouse.

The ABFS path of a folder can be found in the respective lakehouse under the folder's Properties.
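Once paths have been copied from Properties for several lakehouses, a quick way to confirm that each one points at the intended workspace and lakehouse is to split it into its parts. The helper below, split_onelake_path, is a hypothetical sketch that assumes the path layout used in this post (workspace before the @, lakehouse as the first path segment); it only uses the standard library and does not contact OneLake.

```python
from urllib.parse import urlparse


def split_onelake_path(abfs_path: str) -> dict[str, str]:
    """Split a OneLake ABFS path into workspace, host, lakehouse and relative parts."""
    parsed = urlparse(abfs_path)
    if parsed.scheme != "abfss":
        raise ValueError(f"expected an abfss:// path, got {abfs_path!r}")
    # The workspace sits before '@' in the authority, the host after it.
    workspace, _, host = parsed.netloc.partition("@")
    # The first path segment is the lakehouse; the rest is relative to it.
    lakehouse, _, relative_path = parsed.path.lstrip("/").partition("/")
    return {
        "workspace": workspace,
        "host": host,
        "lakehouse": lakehouse,
        "relative_path": relative_path,
    }


# Check that a copied path points at the workspace/lakehouse pair you expect.
copied = "abfss://<workspace1_id>@onelake.dfs.fabric.microsoft.com/<lakehouse1_id>/Files/<folder_name>/"
print(split_onelake_path(copied))
```

Running this over every entry in paths before calling spark.read makes a mismatched workspace/lakehouse pair easy to spot.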