Synthetic Streaming Data Generation for Real-Time Analytics in Fabric

Generating Fraudulent Call Data to send to Fabric Eventstream for RTA

If you want to learn or demo Real-Time Analytics in Microsoft Fabric, you need a streaming data source. The built-in samples are a good way to get started, but several data generators can also create custom streaming sample datasets; the Azure Stream Analytics data generator is one of them. You can see them here. In this blog, I will show how to set one up to use with Fabric Eventstream.

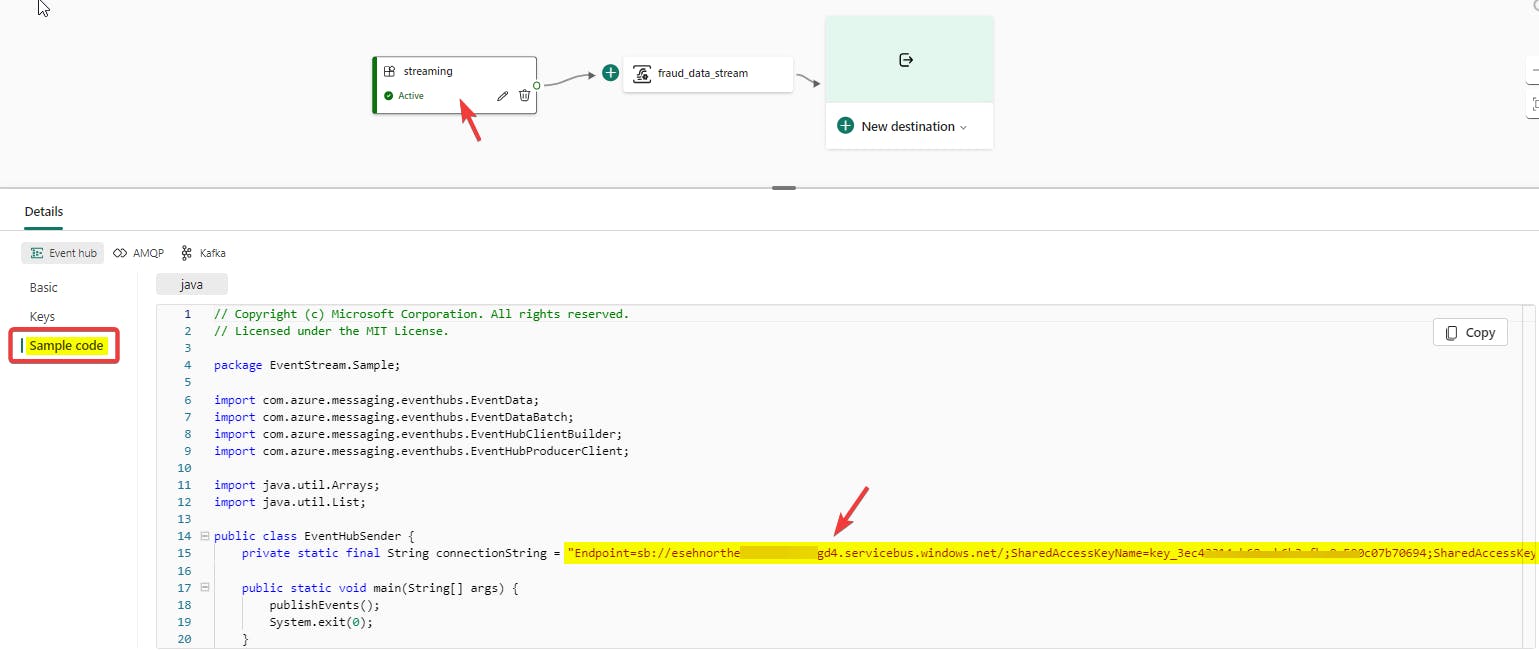

- Create an Eventstream in the Fabric Real-Time Analytics workload.

- Click New source and create a custom app.

- Select the custom app and copy the Event Hub connection string. The connection string has the form:

"Endpoint=sb://<>.servicebus.windows.net/;SharedAccessKeyName=<key_name>;SharedAccessKey=<key>;EntityPath=<path>"

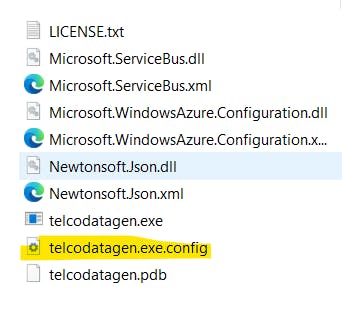
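To see how the pieces of this connection string fit together, here is a minimal Python sketch that splits it into its `key=value` fields. It assumes the format shown above: semicolon-separated pairs, split on the first `=` only, because the SharedAccessKey itself usually ends in `=`. The function name `parse_connection_string` and the sample values are hypothetical placeholders, not real credentials.

```python
def parse_connection_string(conn_str: str) -> dict[str, str]:
    """Split an Event Hub-style connection string into its key/value fields."""
    fields: dict[str, str] = {}
    for segment in conn_str.strip().split(";"):
        if not segment:
            continue  # tolerate a trailing semicolon
        # Split on the first '=' only: base64 keys often end with '='.
        key, sep, value = segment.partition("=")
        if not sep:
            raise ValueError(f"malformed segment: {segment!r}")
        fields[key] = value
    return fields


if __name__ == "__main__":
    # Hypothetical placeholder values, not a real namespace or key.
    sample = (
        "Endpoint=sb://example.servicebus.windows.net/;"
        "SharedAccessKeyName=key_name;"
        "SharedAccessKey=abc123=;"
        "EntityPath=es_example"
    )
    for key, value in parse_connection_string(sample).items():
        print(f"{key}: {value}")
```

Printing the fields this way makes it easy to spot the EntityPath entry that the later config steps rely on.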

- Download the Fraudulent Call Data generator from here and save it to your local machine.

- Extract the downloaded zip file. It contains a file named telcodatagen.exe.config; open it in a text editor such as Notepad or VS Code.

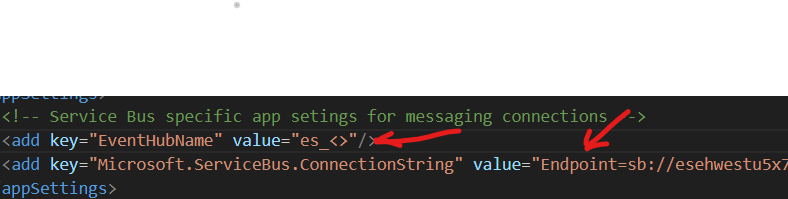

- In the config file, change two app settings: the EventHubName and Microsoft.ServiceBus.ConnectionString values.
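If you prefer to script this edit rather than change the file by hand, the following Python sketch uses the standard library's `xml.etree.ElementTree` to set both values. It assumes the two settings live in a standard .NET `<appSettings>` section as `<add key="..." value="..."/>` entries, so check your copy of telcodatagen.exe.config before relying on it. The function name `set_app_settings` and the sample XML are hypothetical.

```python
import xml.etree.ElementTree as ET


def set_app_settings(config_xml: str, updates: dict[str, str]) -> str:
    """Return config_xml with the given <appSettings> keys set to new values.

    Assumes the standard .NET layout (an assumption about this file):
    <configuration><appSettings><add key="..." value="..."/></appSettings>
    """
    root = ET.fromstring(config_xml)
    remaining = dict(updates)
    # Only touch <add> entries directly under <appSettings>.
    for entry in root.findall("./appSettings/add"):
        key = entry.get("key")
        if key in remaining:
            entry.set("value", remaining.pop(key))
    if remaining:
        raise KeyError(f"keys not found in <appSettings>: {sorted(remaining)}")
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical stand-in for the file's contents; read the real
    # telcodatagen.exe.config instead (and keep a backup).
    sample = (
        "<configuration><appSettings>"
        '<add key="EventHubName" value="old" />'
        '<add key="Microsoft.ServiceBus.ConnectionString" value="old" />'
        "</appSettings></configuration>"
    )
    print(set_app_settings(sample, {
        "EventHubName": "es_example",
        "Microsoft.ServiceBus.ConnectionString":
            "Endpoint=sb://example.servicebus.windows.net/;"
            "SharedAccessKeyName=key_name;SharedAccessKey=abc123=",
    }))
```

Note that ElementTree drops XML comments and the XML declaration by default, so for a one-off change, editing the file in Notepad or VS Code is the simpler route.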

EventHubName: You can get it either from the Eventstream app settings under Keys or from the connection string you copied from the custom app. In that connection string, the EventHubName is the value of EntityPath, which starts with "es_".

Connection String: Start from the same copied connection string and delete its EntityPath entry. The connection string starts with "Endpoint=sb://es<>". Also remove the semicolon (;) before EntityPath, so that the connection string ends with the SharedAccessKey value (that is, with "="). Your final connection string should look like:

"Endpoint=sb://<>.servicebus.windows.net/;SharedAccessKeyName=<key_name>;SharedAccessKey=<key>"

- Save the file, open a command prompt, and browse to the folder where you extracted the generator.
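If you would rather derive both config values programmatically than edit the string by hand, here is a minimal Python sketch of the two edits described above: it takes the EntityPath value as the EventHubName and rebuilds the connection string without EntityPath or its preceding semicolon. The function name `split_eventstream_connection_string` and the sample values are hypothetical, and the "es_" check only issues a warning because that prefix is an observation from this walkthrough, not a documented rule.

```python
import warnings


def split_eventstream_connection_string(conn_str: str) -> tuple[str, str]:
    """Return (EventHubName, connection string without EntityPath)."""
    kept: list[str] = []
    entity_path = None
    for segment in conn_str.strip().split(";"):
        if not segment:
            continue  # ignore empty segments, e.g. a trailing ';'
        key, _, value = segment.partition("=")
        if key == "EntityPath":
            entity_path = value  # this value is the EventHubName
        else:
            kept.append(segment)  # keep Endpoint, SharedAccessKeyName, SharedAccessKey
    if entity_path is None:
        raise ValueError("connection string has no EntityPath entry")
    if not entity_path.startswith("es_"):
        warnings.warn(f"EntityPath {entity_path!r} does not start with 'es_'")
    # Joining the kept segments drops EntityPath and the ';' that preceded it.
    return entity_path, ";".join(kept)


if __name__ == "__main__":
    # Hypothetical placeholder values, not a real namespace or key.
    copied = (
        "Endpoint=sb://example.servicebus.windows.net/;"
        "SharedAccessKeyName=key_name;"
        "SharedAccessKey=abc123=;"
        "EntityPath=es_example"
    )
    hub_name, trimmed = split_eventstream_connection_string(copied)
    print("EventHubName:", hub_name)
    print("Connection string:", trimmed)
```

The trimmed string ends with the key's trailing "=", matching the final form shown above.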

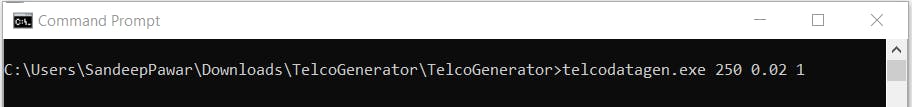

- Run the generator from that folder (cd <folder location>). To generate 250 records per hour with 2% fraudulent calls for 1 hour, use telcodatagen.exe 250 0.02 1. This starts streaming the synthetic data to the event hub created above. To stop the stream, press Ctrl+C.
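The three positional arguments are the records per hour, the fraud probability as a fraction (0.02 for 2%), and the duration in hours. If you want to launch the generator from a script, a minimal Python sketch using `subprocess` might look like this. The helper name `build_telcodatagen_command` and its range checks are illustrative assumptions, not behavior documented for telcodatagen.exe.

```python
import os
import subprocess
import sys


def build_telcodatagen_command(records_per_hour: int, fraud_probability: float,
                               hours: int, exe: str = "telcodatagen.exe") -> list[str]:
    """Build the argument list: records per hour, fraud probability, duration in hours."""
    # Illustrative sanity checks (assumptions), not rules documented for the tool.
    if records_per_hour <= 0:
        raise ValueError("records_per_hour must be positive")
    if not 0.0 <= fraud_probability <= 1.0:
        raise ValueError("fraud_probability is a fraction, e.g. 0.02 for 2%")
    if hours <= 0:
        raise ValueError("hours must be positive")
    return [exe, str(records_per_hour), str(fraud_probability), str(hours)]


if __name__ == "__main__":
    cmd = build_telcodatagen_command(250, 0.02, 1)
    print("Command:", " ".join(cmd))  # telcodatagen.exe 250 0.02 1
    # Only attempt to launch on Windows, from the folder containing the exe.
    if sys.platform == "win32" and os.path.exists(cmd[0]):
        try:
            subprocess.run(cmd, check=True)
        except KeyboardInterrupt:
            print("Stopped.")  # Ctrl+C ends the stream
```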

You should now see data streaming into the Eventstream, and you can save this data to the destination of your choice (Lakehouse, KQL DB, custom app, Reflex) for further analytics!