🚀 Lightning-Fast Copy in Fabric Notebooks

A new addition to mssparkutils for copying files from ADLSg2 to a lakehouse

If you are familiar with mssparkutils, you know that it is packed with utilities for common notebook tasks in Fabric, such as listing files and folders, managing mount points, copying files, and running notebooks. The Fabric notebook team has been adding new tools to mssparkutils to accelerate development. One recent addition is fastcp, which, as the name suggests, copies files like the existing cp method but can be much faster.

fastcp is a Python wrapper around azcopy, which means you can pass the configuration options azcopy provides while keeping the flexibility of Python.
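To make the "wrapper" idea concrete, here is a hypothetical sketch of how Python arguments could map onto an `azcopy copy` command line. It is not fastcp's actual implementation: `build_azcopy_command` is an illustrative name, and the sketch skips authentication and any conversion of abfss paths into the URLs azcopy expects. It relies only on the `azcopy copy <source> <destination>` form and azcopy's `--recursive` flag.

```python
import shlex


def build_azcopy_command(source: str, destination: str, recurse: bool = False,
                         flags: str = "") -> list[str]:
    """Hypothetical sketch: compose an `azcopy copy` argument list.

    NOT fastcp's real implementation; it ignores authentication and any
    translation of abfss:// paths into the URLs azcopy expects.
    """
    cmd = ["azcopy", "copy", source, destination]
    if recurse:
        # azcopy's flag for copying a whole directory tree
        cmd.append("--recursive=true")
    # Split a space-separated flag string the way a shell would
    cmd.extend(shlex.split(flags))
    return cmd


print(build_azcopy_command("src", "dst", recurse=True, flags="--dry-run=true"))
# ['azcopy', 'copy', 'src', 'dst', '--recursive=true', '--dry-run=true']
```

Returning an argument list rather than one string avoids quoting problems if the list is later handed to something like `subprocess.run`.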

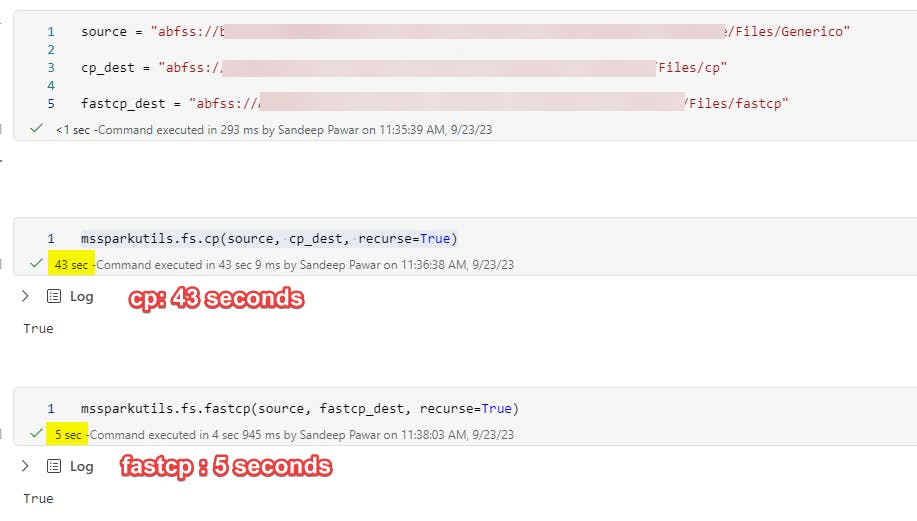

For demonstration, I am copying a 4.5 GB folder from one lakehouse to another. I provide the abfss paths for the source and destination folders in the two lakehouses and use cp and fastcp as follows:

```python
# mssparkutils is preloaded in Fabric notebooks; the import makes it explicit
from notebookutils import mssparkutils

source = "abfss://xxxxxx/Files/Generico"
cp_dest = "abfss://xxxxxx/Files/cp/Generico"
fastcp_dest = "abfss://xxxxxx/Files/fastcp/Generico"

# copy using cp
mssparkutils.fs.cp(source, cp_dest, recurse=True)

# copy using fastcp
mssparkutils.fs.fastcp(source, fastcp_dest, recurse=True)
```

cp took 43 seconds to copy the data, while fastcp finished the job in 5 seconds: roughly a 9x speedup!
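If you want to run a comparison like this in your own notebook, a small timing helper is enough. This is a minimal sketch: `time_call` and `speedup` are hypothetical helper names, each copy is timed once, so expect some run-to-run variance.

```python
import time
from typing import Any, Callable


def time_call(fn: Callable[..., Any], *args: Any, **kwargs: Any) -> float:
    """Run fn(*args, **kwargs) once and return the elapsed wall-clock seconds."""
    start = time.perf_counter()
    fn(*args, **kwargs)
    return time.perf_counter() - start


def speedup(baseline_s: float, candidate_s: float) -> float:
    """How many times faster the candidate was than the baseline."""
    return baseline_s / candidate_s


# With the timings reported above: 43 s for cp vs 5 s for fastcp
print(round(speedup(43, 5), 1))  # 8.6

# In a Fabric notebook (uses the paths defined earlier):
# t_cp = time_call(mssparkutils.fs.cp, source, cp_dest, recurse=True)
# t_fast = time_call(mssparkutils.fs.fastcp, source, fastcp_dest, recurse=True)
# print(f"speedup: {speedup(t_cp, t_fast):.1f}x")
```

`time.perf_counter` is used instead of `time.time` because it is a monotonic, high-resolution clock meant for measuring intervals.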

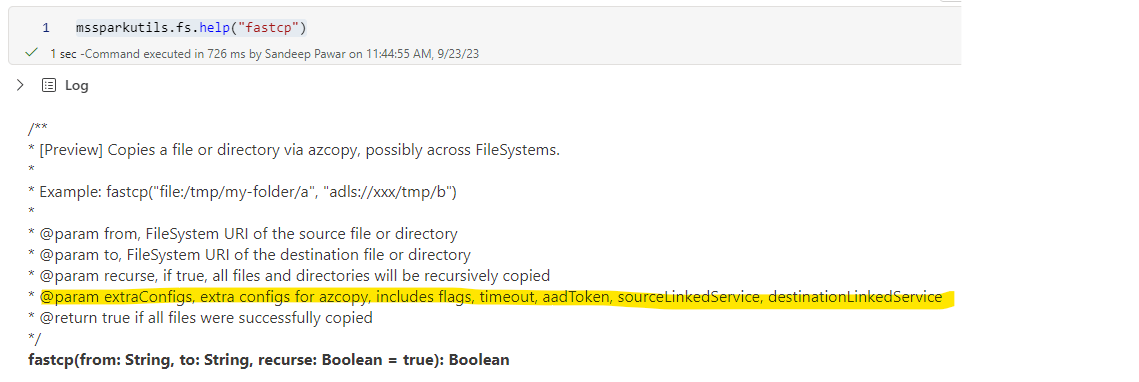

To learn more about fastcp, run `mssparkutils.fs.help("fastcp")`.

You can specify additional copy options using the extraConfigs parameter. All options supported by azcopy can be passed through this dictionary. For example, I used `mssparkutils.fs.fastcp(source, fastcp_dest, recurse=True, extraConfigs={"flags": "--dry-run=true"})` to do a dry run, which previews the copy without actually copying any data. To pass several flags, separate them with spaces, e.g. `extraConfigs={"flags": "--dry-run=true --overwrite=true"}`.
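Because the flags travel as one space-separated string, it can be handy to build that string from a Python dictionary. The helper below is a hypothetical convenience (`to_azcopy_flags` is not part of mssparkutils); it assumes every option is written as `--name=value` and that no value contains spaces.

```python
def to_azcopy_flags(options: dict) -> str:
    """Hypothetical helper: turn {"dry-run": True} into "--dry-run=true".

    Booleans become azcopy's lowercase true/false; other values are
    formatted as strings. Values containing spaces are not handled.
    """
    parts = []
    for name, value in options.items():
        if isinstance(value, bool):
            value = "true" if value else "false"
        parts.append(f"--{name}={value}")
    return " ".join(parts)


flags = to_azcopy_flags({"dry-run": True, "overwrite": "true"})
print(flags)  # --dry-run=true --overwrite=true

# In a Fabric notebook:
# mssparkutils.fs.fastcp(source, fastcp_dest, recurse=True,
#                        extraConfigs={"flags": flags})
```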

Versatility of cp

Although fastcp is faster for copying files from ADLSg2 to the lakehouse, cp is a great choice for copying files from a variety of sources and filesystems that fastcp doesn't support. For example:

Blob store

```python
blob_source = 'https://pandemicdatalake.blob.core.windows.net/public/curated/covid-19/bing_covid-19_data/latest/bing_covid-19_data.parquet'
lh_dest = "abfss://<ws>@onelake.dfs.fabric.microsoft.com/<lh>.Lakehouse/Files"

mssparkutils.fs.cp(blob_source, lh_dest, recurse=True)
```

GitHub

```python
github_source = 'https://media.githubusercontent.com/media/datablist/sample-csv-files/main/files/customers/customers-100.csv'
lh_dest = "abfss://<ws>@onelake.dfs.fabric.microsoft.com/<lh>.Lakehouse/Files"

mssparkutils.fs.cp(github_source, lh_dest, recurse=True)
```
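Since the two methods cover different kinds of sources, a notebook that copies from mixed locations could pick one by URL scheme. This is a sketch under an assumed heuristic: `pick_copy_method` is a hypothetical name, and the abfss-prefix check does not verify that fastcp actually supports a particular abfss source.

```python
def pick_copy_method(source: str) -> str:
    """Hypothetical heuristic: fastcp for abfss:// paths, cp for everything else
    (e.g. https:// URLs for Blob storage or GitHub)."""
    return "fastcp" if source.lower().startswith("abfss://") else "cp"


print(pick_copy_method("abfss://xxxxxx/Files/Generico"))          # fastcp
print(pick_copy_method("https://example.com/data/file.parquet"))  # cp

# In a Fabric notebook:
# copy_fn = getattr(mssparkutils.fs, pick_copy_method(src))
# copy_fn(src, lh_dest, recurse=True)
```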

You can pretty much replace wget with cp to download files and copy them to a Fabric lakehouse.
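wget names a download after the last segment of the URL path. To get the same behavior and control the file name in the lakehouse explicitly, you could build the destination path yourself. `lakehouse_target` is a hypothetical helper, and the `<ws>`/`<lh>` placeholders are the same ones used above.

```python
import posixpath
from urllib.parse import urlparse


def lakehouse_target(url: str, lh_dest: str) -> str:
    """Hypothetical helper: append the URL's file name to a lakehouse folder,
    the way wget names a download after the last path segment."""
    file_name = posixpath.basename(urlparse(url).path)
    if not file_name:
        raise ValueError(f"URL has no file name: {url}")
    return lh_dest.rstrip("/") + "/" + file_name


url = "https://media.githubusercontent.com/media/datablist/sample-csv-files/main/files/customers/customers-100.csv"
print(lakehouse_target(url, "abfss://<ws>@onelake.dfs.fabric.microsoft.com/<lh>.Lakehouse/Files"))
# abfss://<ws>@onelake.dfs.fabric.microsoft.com/<lh>.Lakehouse/Files/customers-100.csv

# In a Fabric notebook, with a hypothetical list of URLs:
# for u in urls:
#     mssparkutils.fs.cp(u, lakehouse_target(u, lh_dest))
```

Parsing with `urlparse` first means query strings such as `?raw=true` are dropped before the file name is taken.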

Keep an eye on the official documentation to learn more.

I want to thank Jene Zhang, Yi Lin, Fang Zhang and Tiago Rente from Microsoft for the information.